Robotics and AI

Robo-Brush: Autonomous Toothbrushing with Deep Reinforcement Learning and Learning from Demonstration

Oral hygiene is a daily necessity, yet for individuals with limited mobility, toothbrushing can be difficult or impossible without assistance. Robo-Brush addresses this challenge by combining Deep Reinforcement Learning (DRL) with Learning from Demonstration (LfD) to teach a robotic arm how to brush teeth both thoroughly and safely. Using a high-fidelity MuJoCo simulation with anatomically detailed dental models, the system learned human-like brushing strategies that balanced coverage with gentle force. Results showed that adding just a small amount of demonstration data improved brushing coverage by over 6%, while also producing smoother, more natural motions. This work highlights the potential of intelligent, learning-enabled robots to take on delicate personal care tasks in assistive healthcare.

|Publication| |Video|

Real-Time Rehabilitation Tracking by Integration of Computer Vision and Virtual Hand

Accurate tracking of hand rehabilitation progress is often limited to costly clinical equipment and in-person assessments. To make this process more accessible, we developed a framework that combines computer vision and physics-based simulation to estimate finger joint dynamics in real time. Using a single camera, MediaPipe extracts hand landmarks, which are mapped to the MANO hand model in PyBullet. A PID controller, optimized with genetic algorithms, computes the torques at the MCP and PIP joints, enabling precise tracking of finger movement and force generation. Validation against experimental exoskeleton data confirmed high accuracy, with squared torque errors as low as 10⁻⁵ (N·m)². By quantifying hand joint dynamics through vision alone, this approach provides a scalable path for tele-rehabilitation, prosthetic design, and adaptive robotic hand control, helping bridge the gap between clinical precision and at-home accessibility.
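As a rough illustration of the landmark-to-joint step, the sketch below computes a flexion angle at a joint from three 3D landmark positions (for example the points before, at, and after the PIP joint). It is a minimal sketch and not the project's actual mapping to the MANO model: the function names `joint_angle` and `flexion` are hypothetical, and the landmarks are assumed to be plain `(x, y, z)` tuples.

```python
import math


def joint_angle(a, b, c):
    """Return the angle at point b (radians) formed by the segments b->a and b->c.

    a, b, c are (x, y, z) tuples, e.g. three consecutive finger landmarks.
    """
    v1 = [a[i] - b[i] for i in range(3)]
    v2 = [c[i] - b[i] for i in range(3)]
    dot = sum(p * q for p, q in zip(v1, v2))
    n1 = math.sqrt(sum(p * p for p in v1))
    n2 = math.sqrt(sum(q * q for q in v2))
    if n1 == 0.0 or n2 == 0.0:
        raise ValueError("degenerate landmarks: two points coincide")
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_t = max(-1.0, min(1.0, dot / (n1 * n2)))
    return math.acos(cos_t)


def flexion(a, b, c):
    """Flexion angle (radians): 0 for a straight finger, growing as it bends."""
    return math.pi - joint_angle(a, b, c)
```

A straight finger gives a flexion of zero, and a right-angle bend gives π/2. In a full pipeline these angles would serve as the reference signal that the PID controller tracks.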

|Publication| |Video|

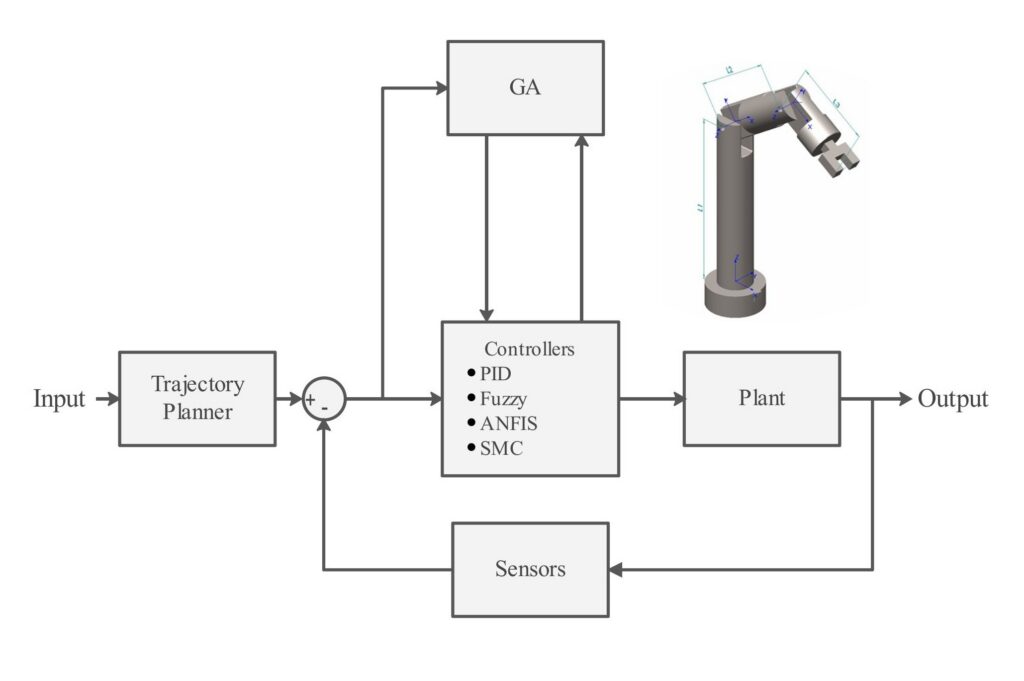

GA-Based Optimization of Control Strategies for a 3R Nonplanar Robotic Manipulator

This study presents a simulation of a 3R nonplanar robotic arm that follows sinusoidal reference trajectories in the presence of Gaussian noise. Four control strategies are implemented: PID, Fuzzy Logic, ANFIS, and Sliding Mode Control. The parameters of these controllers are optimized using a Genetic Algorithm (GA), and a moving average filter is applied to mitigate the effects of noise. All four controllers are evaluated in simulation under identical conditions, allowing a detailed comparison of their effectiveness.
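To make the noise-mitigation step concrete, here is a minimal sketch of a causal moving average filter applied to a sinusoid with Gaussian noise. The helper names, window length, and noise level are assumptions chosen for illustration, not values from the study.

```python
import math
import random
from collections import deque


def noisy_sinusoid(n, amplitude, period_samples, sigma, seed=0):
    """Generate n samples of a sinusoid with additive Gaussian noise (illustrative)."""
    rng = random.Random(seed)
    return [
        amplitude * math.sin(2.0 * math.pi * k / period_samples) + rng.gauss(0.0, sigma)
        for k in range(n)
    ]


def moving_average(samples, window):
    """Causal moving average: each output is the mean of the last `window` inputs.

    During warm-up (fewer than `window` samples seen) the mean is taken over
    the samples available so far.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    buf = deque(maxlen=window)
    total = 0.0
    out = []
    for x in samples:
        if len(buf) == window:
            total -= buf[0]  # value about to be evicted by the append below
        buf.append(x)
        total += x
        out.append(total / len(buf))
    return out
```

A longer window suppresses more noise but adds more lag to the measured joint angle, so the window length is a trade-off between smoothness and tracking delay.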

Flexibot

Flexibot is a continuum robot with 8 degrees of freedom (16 actuators) that uses computer vision as its sensor. Its design is inspired by nature, particularly snakes, and it could be used as a prosthetic human finger in the future. I am currently working on its controller. First, I am simulating the robot in PyBullet to train an initial control policy with reinforcement learning. After this initial training, the second stage will take place in the real environment, using an offline dataset.

|Github|

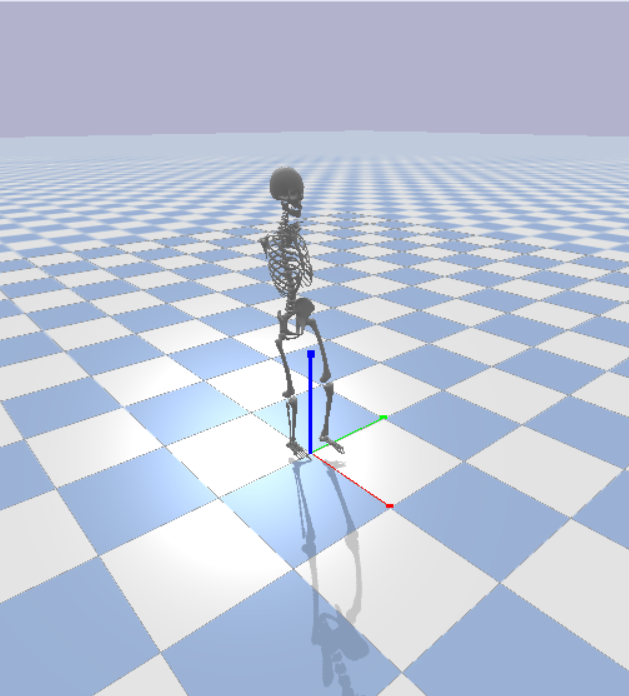

Skeleton Environment in PyBullet

I built a PyBullet environment for a skeleton with 6 degrees of freedom. You can use this environment to build your own models or to train an RL controller for them. A gait cycle for the ankle, knee, and hip angles is provided in gait.py, which you can use as an initial reference for training your model.

You can design a robot of any shape for any application by following the instructions in my GitHub repository.

|Github|

Mobile Robot Control with Deep Reinforcement Learning (TD3) in Continuous Space with Obstacles

In this project, a TD3 (Twin Delayed Deep Deterministic Policy Gradient) agent was trained to control a mobile robot so that it reaches a defined goal state. The state space included the robot’s x and y position, its orientation (theta), and its relative distances to the goal, providing the agent with sufficient information to navigate effectively. The action space consisted of the robot’s linear and angular velocities, both normalized to the range [-1, 1] to ensure stable and consistent control.

Through this setup, the robot was able to learn goal-directed behavior by interacting with its environment and receiving feedback from the reinforcement learning framework, demonstrating the potential of advanced algorithms for autonomous navigation tasks.
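The state and action conventions described above can be sketched as follows. This is only an illustration: the velocity limits `MAX_LINEAR` and `MAX_ANGULAR` and the function names are hypothetical, and the relative distances to the goal are assumed to be the x and y offsets.

```python
# Hypothetical physical limits; the real robot's limits are not stated here.
MAX_LINEAR = 0.5   # m/s
MAX_ANGULAR = 1.0  # rad/s


def build_state(x, y, theta, goal_x, goal_y):
    """Assemble the observation: pose plus offsets from the robot to the goal."""
    return [x, y, theta, goal_x - x, goal_y - y]


def scale_action(action):
    """Map a normalized action in [-1, 1]^2 to (linear, angular) velocities.

    Values outside [-1, 1] are clipped first, so exploration noise added by
    the agent cannot command a velocity beyond the assumed limits.
    """
    v_norm, w_norm = (max(-1.0, min(1.0, a)) for a in action)
    return v_norm * MAX_LINEAR, w_norm * MAX_ANGULAR
```

Keeping the policy output in [-1, 1] matches the tanh output layer commonly used for TD3 actors, and the scaling to physical units stays in one place in the environment.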

Forward Collision Warning System Using Stereo Vision

Using two camera modules, a stereo vision sensor was designed and implemented to generate accurate depth maps. The system processes visual data in real time to estimate the distance of surrounding objects, providing the foundation for advanced perception in autonomous and assisted driving technologies. By leveraging principles of computer vision, the setup enabled precise environmental mapping that goes beyond what a single camera can achieve.

To enhance safety, the system was further developed with an obstacle detection and collision-alert mechanism. Whenever nearby objects entered a critical range, the system issued a warning to the driver, reducing the risk of accidents. This project demonstrated the practical integration of AI and computer vision into real-world applications, combining technical innovation with a clear focus on improving human safety and machine interaction.
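The alert logic can be sketched as a threshold on the depth map. The version below is a simplified assumption rather than the deployed logic: it raises a warning when at least `min_pixels` valid depth values fall inside the critical range, where `min_pixels` is a hypothetical guard against isolated noisy pixels.

```python
def collision_warning(depth_map, critical_m, min_pixels=3):
    """Return True when enough pixels are closer than the critical distance.

    depth_map is a list of rows of depths in metres; zero or negative values
    are treated as invalid measurements and ignored.
    """
    close = sum(1 for row in depth_map for d in row if 0.0 < d < critical_m)
    return close >= min_pixels
```

For example, a depth map with three pixels at roughly 1 m triggers a warning for a critical range of 1.5 m, but not for a critical range of 1.0 m if only one of them is closer than that.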

Human Pose Detection

Data was collected for four distinct human poses and used to train a variety of artificial neural networks in order to compare their performance. Each model was evaluated based on its ability to recognize and classify the poses accurately, providing insights into the effectiveness of different network architectures for human pose recognition.

To improve accuracy and reduce overfitting, several data augmentation techniques were applied to expand the dataset. These methods enhanced the diversity of training samples, enabling the networks to generalize better to unseen data and improving overall robustness of the system.

|Github|

Stabilizer Spoon

This project is designed to assist individuals who have limited or impaired hand control, such as those suffering from Parkinson’s Disease or other motor disorders. The system employs an Inertial Measurement Unit (IMU) sensor to accurately capture hand orientation and movement. Two servomotors are integrated to translate the detected movements into precise mechanical responses, enabling smoother and more controlled hand motions.

To build the physical framework of the system, custom plastic components were 3D-printed, ensuring a lightweight and ergonomic design that can comfortably interface with the user’s hand. One of the main challenges in the system was minimizing measurement and control noise, which can significantly affect performance. To address this, multiple noise reduction techniques were applied, including filtering algorithms such as the Kalman filter, which substantially reduced the effect of sensor and actuator noise and resulted in a more stable and reliable system.
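To show the filtering idea in its simplest form, here is a minimal scalar Kalman filter that smooths a single noisy angle reading under a constant-state model. It is a sketch under stated assumptions: the class name `ScalarKalman` and the noise variances `q` and `r` are hypothetical, and the device's actual filter may use a richer model that fuses gyroscope and accelerometer data.

```python
class ScalarKalman:
    """One-dimensional Kalman filter with a constant-state process model."""

    def __init__(self, q, r, x0=0.0, p0=1.0):
        self.q = q    # process noise variance (how fast the true value may drift)
        self.r = r    # measurement noise variance
        self.x = x0   # current state estimate
        self.p = p0   # variance of the current estimate

    def update(self, z):
        """Fold in one measurement z and return the new estimate."""
        # Predict: the state is assumed constant, so only uncertainty grows.
        self.p += self.q
        # Correct: blend prediction and measurement using the Kalman gain.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= 1.0 - k
        return self.x
```

A larger `r` relative to `q` makes the filter trust its own estimate more and respond more slowly, which is the main tuning trade-off for a hand-held device that has to react quickly to tremor.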

Overall, this combination of sensors, actuators, and filtering techniques makes the device a practical tool for improving hand dexterity and enhancing the quality of life for individuals with motor impairments.

|Github|

Self-Balancing Robot with PID Controller

In this project, a two-wheeled robot was developed with a focus on maintaining stability and precise motion control. An ultrasonic sensor was employed to detect obstacles and measure distances in the robot’s environment, providing essential feedback for safe navigation. Two DC motors were used to drive the wheels, allowing controlled movement and maneuverability.

The system was powered and coordinated using an Arduino Uno microcontroller, which served as the central processing unit for reading sensor data and controlling motor outputs. To ensure the robot maintained balance and responded smoothly to disturbances, a Proportional-Integral-Derivative (PID) controller was implemented. This control strategy continuously adjusted the motor speeds based on real-time feedback, minimizing oscillations and improving overall stability.
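The control loop can be sketched as a discrete PID update. The example is written in Python for readability, whereas the robot itself runs on an Arduino. The gains, the output limit, and the class name are hypothetical, and the feedback is assumed to be a tilt measurement.

```python
class PID:
    """Discrete PID controller with a clamped output."""

    def __init__(self, kp, ki, kd, out_limit):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_limit = out_limit  # e.g. maximum PWM magnitude
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement, dt):
        """Return the control output for one time step of length dt seconds."""
        error = setpoint - measurement
        self.integral += error * dt
        # No derivative term on the first call, when there is no previous error.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        output = self.kp * error + self.ki * self.integral + self.kd * derivative
        # Clamp to the actuator range.
        return max(-self.out_limit, min(self.out_limit, output))
```

With `kp=2.0` and the other gains at zero, a measurement of -5 against a setpoint of 0 yields an output of 10, and the sign of the output would select the motor direction. This sketch clamps only the output, so a practical version would also limit the integral term to avoid windup.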

Through the integration of sensors, actuators, and the PID control algorithm, the robot was able to achieve reliable two-wheeled balance, demonstrating effective real-time control and obstacle-aware navigation.

|Github|